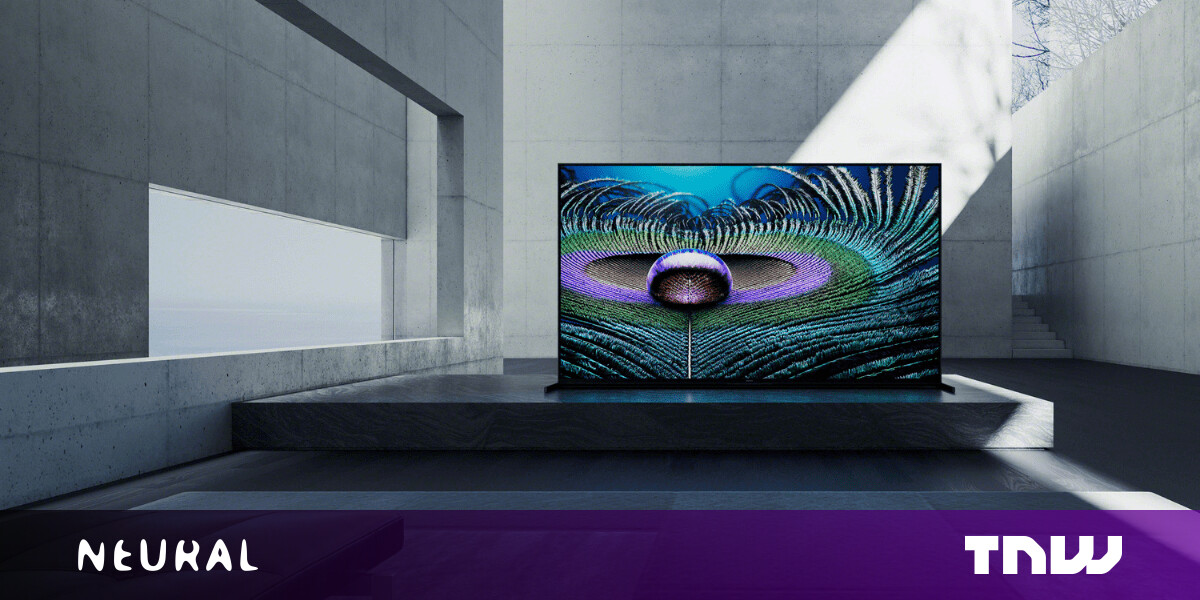

Sony has unveiled a new line of Bravia TVs that “mimic the human brain” to reproduce the way we see and hear.

The devices use a new processing method that Sony calls “cognitive intelligence”. The company says it goes beyond standard AI to create an immersive visual and sound experience:

> While conventional AI can only detect and analyze picture elements such as color, contrast and detail individually, the new Cognitive Processor XR can cross-analyze a whole array of elements at once, just as our brains do. In doing so, each element is adjusted to its best final result in conjunction with the others, so that everything is synchronized and lifelike, something that conventional AI cannot achieve.

The processor divides the screen into zones and detects the focal point of the image. It also analyzes the position of sounds in the signal so that the audio matches what is shown on screen.


In a video demonstration, Sony said that as TVs get larger, viewers tend to focus on parts of the screen rather than the entire picture, just as we do when looking at the real world.

“The human eye uses different resolutions when we look at the entire image and when we focus on something specific,” said Yasuo Inoue, a signal processing expert at Sony.

“The XR processor analyzes the focal point and refers to that point while processing the entire image to generate an image close to what a human sees.”

It's impossible to say how well the AI works without seeing the TVs in person. And if you want to test them yourself, you'll probably need deep pockets.

The price and availability of the new line will be announced in the spring.

Published on January 8, 2021 – 17:17 UTC