While American carriers are busy marketing their new 5G networks, the reality is that the vast majority of people will not experience the advertised speeds. Data speeds are still slow in many parts of the United States, and around the world, so services like Google Duo use compression techniques to deliver the best possible video and audio experience efficiently. Google is now testing a new audio codec that aims to substantially improve audio quality over poor network connections.

In a blog post, Google’s AI team details its new high-quality, very low bit rate speech codec, called “Lyra”. Like traditional parametric codecs, Lyra’s basic architecture involves extracting distinctive speech attributes (also known as “features”) in the form of log mel spectrograms (the energy of the speech in a set of frequency bands spaced to mimic human hearing). These features are then compressed, transmitted over the network and used at the other end to recreate the speech with a generative model. Unlike traditional parametric codecs, however, Lyra relies on a new high-quality generative audio model that not only extracts critical speech parameters but can also reconstruct speech from minimal amounts of data. The generative model used in Lyra builds on Google’s previous work on WaveNetEQ, the generative-model-based packet loss concealment system currently used in Google Duo.

Basic architecture of Lyra. Source: Google
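The feature-extraction step described above can be sketched with a textbook log mel spectrogram in plain Python. This is a generic computation, not Lyra’s actual front end: the 25 ms frame, 10 ms hop, 16 mel bands, 512-point DFT and all function names are illustrative assumptions.

```python
import math

def hz_to_mel(f):
    # HTK-style mel scale: roughly linear below 1 kHz, logarithmic above
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def sine(freq, sample_rate, n_samples):
    """Hypothetical helper: a pure test tone."""
    return [math.sin(2 * math.pi * freq * n / sample_rate) for n in range(n_samples)]

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters that map the n_fft // 2 + 1 DFT bins onto n_mels bands."""
    n_bins = n_fft // 2 + 1
    top = hz_to_mel(sample_rate / 2)
    # n_mels + 2 points evenly spaced on the mel scale, converted to DFT bin indices
    edges = [math.floor((n_fft + 1) * mel_to_hz(top * i / (n_mels + 1)) / sample_rate)
             for i in range(n_mels + 2)]
    bank = []
    for j in range(1, n_mels + 1):
        left, center, right = edges[j - 1], edges[j], edges[j + 1]
        row = [0.0] * n_bins
        for k in range(left, center):      # rising slope of the triangle
            row[k] = (k - left) / (center - left)
        for k in range(center, right):     # falling slope of the triangle
            row[k] = (right - k) / (right - center)
        bank.append(row)
    return bank

def power_spectrum(frame, n_fft):
    """|DFT|^2 of a zero-padded frame (naive O(n^2) DFT, fine for a demo)."""
    padded = list(frame) + [0.0] * (n_fft - len(frame))
    out = []
    for k in range(n_fft // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * n / n_fft) for n, x in enumerate(padded))
        im = sum(x * math.sin(2 * math.pi * k * n / n_fft) for n, x in enumerate(padded))
        out.append(re * re + im * im)
    return out

def log_mel_spectrogram(signal, sample_rate, frame_len=400, hop=160, n_mels=16, n_fft=512):
    """One row of n_mels log band energies per frame (400/160 samples = 25/10 ms at 16 kHz)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    bank = mel_filterbank(n_mels, n_fft, sample_rate)
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        power = power_spectrum(frame, n_fft)
        # a small floor avoids log(0) in silent bands
        rows.append([math.log(sum(f * p for f, p in zip(filt, power)) + 1e-10) for filt in bank])
    return rows
```

For example, `log_mel_spectrogram(sine(1000, 16000, 800), 16000)` returns 3 frames of 16 values each, and the strongest band of a 1 kHz tone sits lower than that of a 4 kHz tone. A handful of numbers per frame is far smaller than the raw samples, which is what makes this kind of representation attractive to compress and transmit.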

Google says this approach puts Lyra on par with the state-of-the-art waveform codecs used on many streaming and communication platforms today. According to Google, Lyra’s advantage over these waveform codecs is that it does not compress and send the signal sample by sample, which requires a higher bit rate (and therefore more data). To address concerns about the computational cost of running a generative model on the device, Google says that Lyra uses a “cheaper recurrent generative model” that works “at a lower rate” but generates several signals in different frequency ranges in parallel, which are then combined “into a single output signal at the desired sample rate.” Running this generative model in real time on a mid-range phone results in a processing latency of 90 ms, which Google says is “in line with other traditional speech codecs.”
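The idea of generating several band signals at a lower rate and then combining them can be illustrated with the simplest two-band filter bank, the Haar pair. This is a stand-in chosen for clarity: the post does not say which band split Lyra uses, and the function names below are hypothetical. Each band runs at half the output sample rate, and synthesis merges the two bands back into one full-rate signal.

```python
import math

SQRT2 = math.sqrt(2.0)

def analysis(signal):
    """Split a full-rate signal into low- and high-band signals at half the rate (Haar filter bank)."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    half = len(signal) // 2
    low = [(signal[2 * n] + signal[2 * n + 1]) / SQRT2 for n in range(half)]   # sum: low band
    high = [(signal[2 * n] - signal[2 * n + 1]) / SQRT2 for n in range(half)]  # difference: high band
    return low, high

def synthesis(low, high):
    """Combine two half-rate band signals into one output at the full sample rate."""
    out = []
    for lo, hi in zip(low, high):
        out.append((lo + hi) / SQRT2)  # recovers the even-indexed sample
        out.append((lo - hi) / SQRT2)  # recovers the odd-indexed sample
    return out
```

In this sketch’s terms, a two-band generator would emit one low-band and one high-band sample per step, so producing N output samples takes N/2 sequential steps instead of N, and only `synthesis` would run at the receiver. `analysis` is included only to check that the round trip is lossless.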

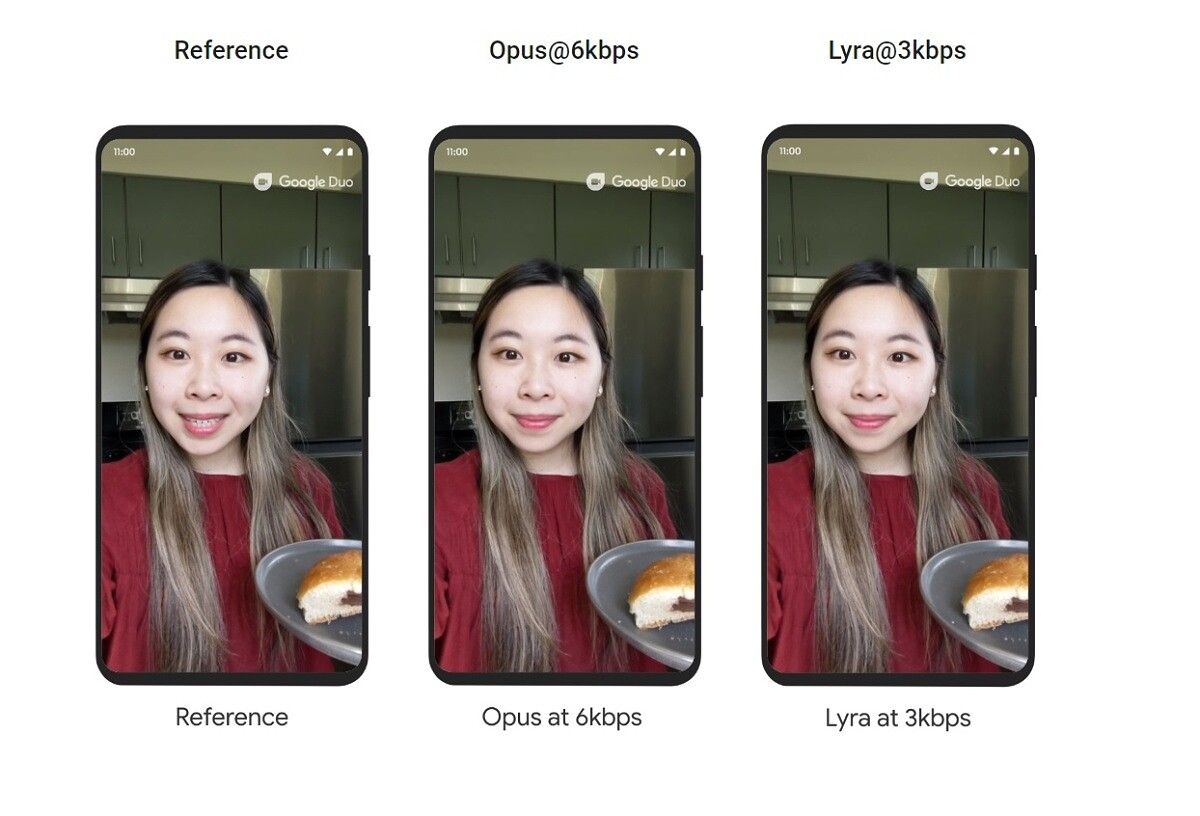

Google says that when Lyra is paired with the AV1 video codec, video chats are possible even for users on an old 56 kbps dial-up modem. That’s because Lyra was designed to operate in heavily bandwidth-constrained environments, at bit rates as low as 3 kbps. According to Google, Lyra easily outperforms the royalty-free, open-source Opus codec, as well as other codecs such as Speex, MELP and AMR, at very low bit rates. Below are some speech samples provided by Google. Except for the Lyra-encoded audio, each sample suffers from degraded audio quality at very low bit rates.

Speech samples (clean speech and a noisy environment): the original recording compared with versions encoded by Lyra and other codecs at very low bit rates.
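As rough arithmetic on the 56 kbps dial-up figure: a 3 kbps Lyra stream leaves about 53 kbps for AV1 video before any protocol overhead, and one minute of it amounts to 22,500 bytes of payload. The helper names below are hypothetical, and the `overhead_kbps` parameter is an assumption, since the post gives no figure for packet or transport overhead.

```python
def video_budget_kbps(link_kbps, audio_kbps, overhead_kbps=0.0):
    """Bit rate left for video after audio and an assumed protocol overhead."""
    remaining = link_kbps - audio_kbps - overhead_kbps
    if remaining < 0:
        raise ValueError("audio and overhead exceed the link capacity")
    return remaining

def bytes_per_minute(kbps):
    """Payload of one minute of a constant-bit-rate stream, in bytes (1 kbps = 1000 bit/s)."""
    return kbps * 1000 * 60 / 8
```

For example, `video_budget_kbps(56, 3)` returns `53.0`, and with a hypothetical 8 kbps of overhead, `video_budget_kbps(56, 3, overhead_kbps=8)` returns `45.0`.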

Google says it trained Lyra “with thousands of hours of audio with speakers in over 70 languages using open-source audio libraries and then verifying the audio quality with expert and crowdsourced listeners.” The new codec is already rolling out in Google Duo to improve call quality on very low bandwidth connections. Although Lyra currently focuses on speech, Google is exploring how to turn it into a general-purpose audio codec.